In the past, many national weather services approached forecasting by collecting as much data as possible, regardless of quality, on the assumption that more data points would lead to a better understanding of the weather and therefore better forecasts. Many of the stations deployed were of a lower quality standard, and data was only provided at certain times of day because of limitations such as power and cost. This approach did reveal some basic, large-scale patterns, which were analysed to produce models of how those patterns develop and change, allowing national weather services to forecast with some degree of accuracy. Improving forecast quality, especially at smaller regional scales, has proved much more difficult than anticipated: beyond a certain critical threshold, a higher density of stations did not lead to better forecasts.

Today, a tiered approach to data quality and coverage, with data made available as needed rather than simply collected all the time, has proven to improve forecast quality and to give a better understanding of extreme weather events, which can have a major impact on quality of life and on an increasingly finite pool of natural resources. Organisations are also increasingly moving away from trying to determine the percentage chance of an extreme event occurring. Instead, they focus on the impact the event would have should it come to pass, so that mitigation measures can be based on a combination of factors. This intelligent approach to data collection, network size and distribution, and risk mitigation makes much better use of resources, time and money for all involved.

This change in strategy is critical in a world whose growing population is shifting from rural landscapes to urban centres. Any extreme event now has a significantly higher impact, both on those densely populated centres and on the remaining rural land used for agriculture or other infrastructure that sustains the growing population. The following examples illustrate how important it is not only to measure and forecast the weather accurately, but also to understand the impact extreme events may have at the regional, continental or even global scale:

The most expensive weather disaster in recent South American history occurred in 2018, when drought in Uruguay and Argentina cost the economy an estimated US$3.9 billion. It was the most expensive disaster in the history of these two countries, produced Argentina's worst harvest since 2009, and affected the global availability of specific foodstuffs. Another example is the cold weather of July 2018, when temperatures in Argentina and Paraguay were at least 20 degrees Celsius below the average for that month, significantly affecting crop growth and harvest. A further example came in 2016, when Brazil experienced abnormal conditions in the otherwise dry summer season, with heavy convective precipitation events, tornadoes, and severe damage to buildings, bridges and other infrastructure.

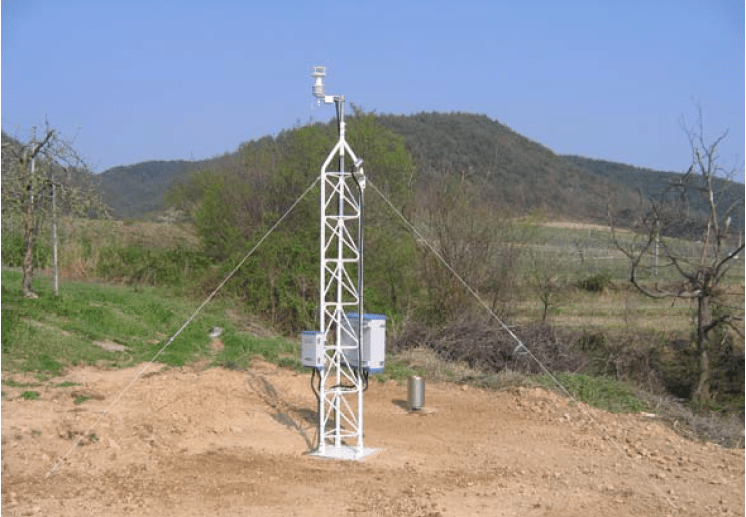

With growing agreement on conditions-based decision management, driven by forecast weather conditions or potential extreme events, it is essential to have infrastructure that supports the concept: data of appropriate quality, used and analysed only when and where it is needed, drawn from an always-ready pool of tiered, quality-vetted meteorological stations. As users of meteorological data move towards this goal, it is vital to have an appropriate level of confidence in the quality of the stations used in the tiered approach, and for this information to be available to anyone using the data. This has led to a move towards better documentation and metadata for such stations, and towards increasingly intelligent reference-quality or multi-parameter adaptive weather instrumentation with on-board quality control monitoring as a second quality tier in the network. This approach is now also gaining World Meteorological Organization (WMO) backing, with guidelines for users being produced.
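As a rough illustration of what "only when and where needed" could look like in practice, the sketch below picks stations from a tiered, quality-vetted pool for a given location. It is a minimal example under stated assumptions, not a WMO or vendor scheme: the `Station` fields, the numeric tier scale (1 = reference quality), the `qc_passed` flag, the station identifiers and the search radius are all hypothetical.

```python
from dataclasses import dataclass
from math import asin, cos, radians, sin, sqrt


@dataclass(frozen=True)
class Station:
    station_id: str
    lat: float
    lon: float
    tier: int        # hypothetical scale: 1 = reference quality, larger = lower quality
    qc_passed: bool  # assumed to come from the station's on-board quality control


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance using the haversine formula (mean Earth radius 6371 km)."""
    dlat = radians(lat2 - lat1)
    dlon = radians(lon2 - lon1)
    a = sin(dlat / 2) ** 2 + cos(radians(lat1)) * cos(radians(lat2)) * sin(dlon / 2) ** 2
    return 2 * 6371.0 * asin(sqrt(a))


def select_stations(pool, lat, lon, radius_km, max_tier):
    """Return vetted stations within radius_km of (lat, lon), best tier first, then nearest."""
    candidates = []
    for s in pool:
        # Skip stations that failed quality control or fall below the required tier.
        if not s.qc_passed or s.tier > max_tier:
            continue
        d = distance_km(lat, lon, s.lat, s.lon)
        if d <= radius_km:
            candidates.append((s.tier, d, s))
    candidates.sort(key=lambda item: (item[0], item[1]))
    return [s for _, _, s in candidates]


# Hypothetical pool; identifiers and coordinates are illustrative only.
example_pool = [
    Station("REF-01", -34.60, -58.40, tier=1, qc_passed=True),
    Station("AUX-07", -34.62, -58.38, tier=2, qc_passed=True),
    Station("AUX-09", -34.61, -58.39, tier=2, qc_passed=False),
    Station("NET-42", -34.59, -58.37, tier=3, qc_passed=True),
    Station("REF-02", -31.42, -64.18, tier=1, qc_passed=True),
]

selected = select_stations(example_pool, lat=-34.60, lon=-58.38, radius_km=25, max_tier=2)
```

With this pool, `selected` contains only `REF-01` and `AUX-07`: the other stations are excluded because they failed quality control, sit in too low a tier, or lie outside the search radius. Sorting by tier before distance reflects the idea that data quality, not proximity alone, should drive which measurement is used.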

As the market shifts to this new approach, some meteorological instrument manufacturers are following the trend towards an as-needed, tiered-quality approach, while others have helped to shape and lead it together with the scientific community. Originally, products existed at many different quality levels, and all were seen as valuable: as long as there was ‘a’ measurement, it was thought to contribute to the quality of the forecast. Just as the approach to forecasting has changed, so has the approach to the instrumentation used in such networks, whether large or small. That assumption no longer holds: it is the quality of the data, not the amount, that matters for getting regional or large-scale weather prediction right.

In this changing landscape, for both forecasting and instrumentation, it is important to have a defensible position when using a measurement from the internet or from a secondary network, especially when the networks or stations are not owned and managed by the user in question, because the cost of a wrong decision is increasingly high for all parties involved.

Instrument manufacturers that work with the scientific community and understand the changing strategy are better placed, and have more past experience to draw on, to adapt products where necessary to meet the new requirements and the reduced-infrastructure strategy. End users of the measurements and manufacturers of the products are increasingly working together to produce the right measurement at the right time with the right coverage, so that the best decisions can be made about changing conditions and risk mitigation as extreme events happen.